Chapter Contents

- What is security?

- Basic definitions

- Can we define security?

- Security principles that guide design

- How to protect systems?

- Security layers

What is security?

A good way to start thinking about security is to understand what we mean by a security problem. Any system has a set of usage requirements. For example, a road connecting two locations may allow traffic in only one direction, within a specific speed range, sometimes only during particular time intervals, and only for registered vehicles that meet certain technical requirements. A traffic system, like many other systems we use in our daily lives, has many such requirements. Similarly, airport authorities require passengers to present travel documents and a ticket and to pass through security monitoring before boarding a flight.

In any of these scenarios, there are ways to circumvent the system's requirements and violate the rules under which it operates. For example, a driver may choose to drive in the wrong direction, or a passenger may attempt to bypass the security monitoring procedures at the airport. Both are users of the system, both know the rules that users must follow, and both intend to violate those rules to achieve a specific objective. The violator could have financial motivations or aim to disrupt the system. In the context of security, we call these violations attacks, and we call the violators adversaries or attackers. Adversaries use a procedure, a set of steps, to operate the system while violating its rules. For example, suppose that an adversary aims to bypass the security monitoring at the airport. By design, passengers should have only a single path to the boarding gate, and that path goes through the security monitoring area. If the airport's security design has a flaw that provides two paths to the gate, one with security monitoring and one without, the adversary can search for the second path, exploit it, and reach the gate without ever going through security monitoring. The system is thus vulnerable to an attack, and the attacker's path is an exploit for the vulnerability in the system design.
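The airport example can be sketched as a reachability question: treat the terminal as a directed graph of areas and ask whether the gate can be reached without entering the security checkpoint. The sketch below assumes that model; the area names and the function `bypass_path` are hypothetical. A breadth-first search that refuses to enter the checkpoint returns such a path if one exists. The existence of the path corresponds to the vulnerability, and the returned path corresponds to the exploit.

```python
from collections import deque


def bypass_path(graph, start, goal, checkpoint):
    """Return a path from start to goal that never enters checkpoint, or None.

    graph maps each area to the list of areas directly reachable from it.
    Breadth-first search finds the shortest such path if one exists.
    """
    if start == checkpoint:
        return None
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through the parent links to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt != checkpoint and nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


# Intended design (hypothetical): the only way to the gate is through security.
secure_airport = {
    "entrance": ["check-in"],
    "check-in": ["security"],
    "security": ["gate"],
}

# Flawed design (hypothetical): a service corridor also leads to the gate.
flawed_airport = {
    "entrance": ["check-in"],
    "check-in": ["security", "service-corridor"],
    "security": ["gate"],
    "service-corridor": ["gate"],
}

print(bypass_path(secure_airport, "entrance", "gate", "security"))
# → None
print(bypass_path(flawed_airport, "entrance", "gate", "security"))
# → ['entrance', 'check-in', 'service-corridor', 'gate']
```

The same idea, searching for any route to a protected resource that skips the guard, applies to software systems with several entry points.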

Basic definitions

To perform a systematic study of the concepts behind security, we use the definitions below.

System. A system provides services to a set of users subject to regulations (rules).

Vulnerability. If a system user can operate a system while violating the rules that govern it, the weakness that permits this is a vulnerability, and the procedure the user follows is an exploit of that vulnerability.

Attacker. An attacker is a system user who intentionally exploits a weakness to violate the system's rules.

Note that an attacker does not have to be a person; it could also be a computer program that exploits the system. Likewise, a system does not need to be pure software. It could be a combination of software, hardware, and human operators. However, the systems of interest here are primarily made of software components. For example, a web server has underlying hardware, a software stack, and a system administrator (a human in the loop). Most of the problems we deal with concern vulnerabilities in the software stack and methods to protect against them.

Security is a relative term. We cannot achieve absolute security, or even say that we want to improve security, without a contextual definition. Our approach to defining security is to start with a vulnerability and work our way towards security. Suppose, for the sake of argument, that a system has no rules. For instance, we have a building that anyone can use at any time with no limit on usage. Then our system is always secure against attacks, since no attack (in the context of accessing the building) can be initiated in the first place. Now suppose we add a rule: anyone who enters the building must leave by 9 PM. If the system description stops there, with nothing enforcing the rule, any user can attack the system simply by remaining past 9 PM. If we install a security guard to make users leave by 9 PM, but the guard does not strictly enforce the rule, we still have a security vulnerability. With this system, the problem is to secure it against intruders who remain past 9 PM. If we could achieve that, we would have a relatively secure system.

Systems are usually a lot more complicated. There are multiple ways to enter a system (e.g., ports to connect to a server), and thus various ways to bypass an authentication guard. For example, a naive design of a mobile operating system may provide a mechanism to unlock the screen using a personal identification number (PIN) while leaving the system open to unauthenticated access through a universal serial bus (USB) connection. This design fault leads to a severe vulnerability, allowing an adversary with physical access to the device to attack it. The mobile operating system can be vulnerable to even more attack vectors. For example, it may run services that receive notifications, text messages, or mail. If these services suffer from authentication vulnerabilities, they allow remote intrusion to succeed.
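The mobile operating system example can be sketched as a list of entry points, each recording whether it checks a credential and whether it is reachable remotely. The `EntryPoint` class, the `unauthenticated` and `is_locked` functions, and the flaw assumed in the mail service are hypothetical, chosen only to mirror the scenario in the text. The point the code makes is that the device is locked only if every entry point checks the PIN; a single unguarded entry point is enough to defeat the lock screen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntryPoint:
    """One way into the device (hypothetical model, for illustration only)."""
    name: str
    remote: bool         # reachable over a network rather than by physical access
    requires_auth: bool  # True if the PIN or another credential is checked first


def unauthenticated(entry_points):
    """Names of entry points usable without a credential, grouped by
    whether the attack needs physical access or can be carried out remotely."""
    exposed = [e for e in entry_points if not e.requires_auth]
    return {
        "physical": sorted(e.name for e in exposed if not e.remote),
        "remote": sorted(e.name for e in exposed if e.remote),
    }


def is_locked(entry_points):
    """The device is locked only if *every* entry point checks a credential."""
    return all(e.requires_auth for e in entry_points)


# Naive design from the text: the screen asks for a PIN, but USB does not.
phone = [
    EntryPoint("screen", remote=False, requires_auth=True),
    EntryPoint("usb", remote=False, requires_auth=False),
    EntryPoint("notifications", remote=True, requires_auth=True),
    EntryPoint("text-messages", remote=True, requires_auth=True),
    EntryPoint("mail", remote=True, requires_auth=False),  # assumed flaw
]

print(is_locked(phone))        # False
print(unauthenticated(phone))  # {'physical': ['usb'], 'remote': ['mail']}
```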

According to Saltzer & Schroeder,

"the term security describes techniques that control who may use or modify the computer or the information contained in it."

Saltzer & Schroeder's definition of security is concise and elegant, but we need to be more specific and limit security in terms of adversaries' abilities. Thus, we will modify the definition above to connect security with classes of vulnerabilities.

Can we define security?

Security. Techniques to protect a system's functions and data against specific classes of vulnerabilities that adversaries can exploit.

Vulnerabilities can result from two sources: design flaws and implementation flaws. A design flaw leaves an open door for the attacker to enter, while an implementation flaw is a mistake in realizing the design that proper system testing has not caught. Design flaws lead to vulnerabilities because the system was not thought through at the design stage. For example, the design of a communication protocol may have a vulnerability that allows the attacker to misuse both ends of the communication to their advantage. Such a system can be well tested in implementation while still suffering from severe design flaws.
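A classic example of a protocol design flaw is a message format with no freshness check, which permits replay attacks. The sketch below assumes a hypothetical client and server that share a key and authenticate each request with HMAC-SHA256 from Python's standard `hmac` module. The MAC check is implemented correctly, yet a server that does not remember sequence numbers accepts a captured message a second time. The key, the `seq=...;withdraw=...` message format, and the `Server` class are illustrative assumptions, not a real protocol.

```python
import hashlib
import hmac

KEY = b"shared-secret"  # hypothetical key agreed on in advance by both ends


def sign(message):
    """Authenticate a message with HMAC-SHA256 under the shared key."""
    return hmac.new(KEY, message, hashlib.sha256).digest()


class Server:
    def __init__(self, track_sequence_numbers):
        self.balance = 100
        self.track = track_sequence_numbers
        self.seen = set()  # sequence numbers already accepted

    def receive(self, message, tag):
        # The MAC check is implemented correctly: forged messages are rejected.
        if not hmac.compare_digest(sign(message), tag):
            return False
        fields = dict(part.split("=") for part in message.decode().split(";"))
        # Design flaw when track is False: nothing stops a valid message
        # from being accepted again, so a recorded message can be replayed.
        if self.track:
            if fields["seq"] in self.seen:
                return False
            self.seen.add(fields["seq"])
        self.balance -= int(fields["withdraw"])
        return True


msg = b"seq=1;withdraw=10"
tag = sign(msg)  # an eavesdropper can record both on the wire

for server in (Server(track_sequence_numbers=False), Server(track_sequence_numbers=True)):
    server.receive(msg, tag)  # the legitimate request
    server.receive(msg, tag)  # the attacker's replay
    print(server.balance)     # 80 without sequence numbers, then 90 with them
```

Rejecting repeated sequence numbers is one common fix; nonces and timestamps are others. Because the flaw lies in the protocol design, testing that the MAC check works would not reveal it.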

In other cases, a system may be well thought out, have a clean and robust design, and have no major vulnerability, yet still suffer from an implementation flaw. For example, one may design the encryption of traffic between a client and a server in the best possible way, but the network layer responsible for encrypted data transfer may mistakenly send some of the data in plaintext. This is not a design flaw but an implementation flaw: the implementation does not carry out the design as intended.
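An implementation flaw like this often hides in an error path that "fails open." The sketch below assumes a design requirement that all data be encrypted before it leaves the client. `encrypt` is a toy XOR stand-in (not secure; a real system would use a vetted cryptographic library), and `send_flawed`, `send_fixed`, and the `wire` list that represents the network are hypothetical. The flawed version silently falls back to plaintext when encryption fails; the fixed version fails closed.

```python
class EncryptionError(Exception):
    pass


def encrypt(key, data):
    """Toy stand-in for a real cipher: XOR with a repeating key.
    NOT secure; it exists only so the example runs."""
    if not key:
        raise EncryptionError("no session key")
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def send_flawed(key, data, wire):
    # Implementation flaw: on an encryption error the code "recovers" by
    # sending the data anyway, silently violating the design requirement.
    try:
        wire.append(encrypt(key, data))
    except EncryptionError:
        wire.append(data)  # plaintext leaks onto the network


def send_fixed(key, data, wire):
    # Fail closed: if encryption fails, the exception propagates and
    # nothing is transmitted.
    wire.append(encrypt(key, data))


wire = []
send_flawed(None, b"patient record", wire)
print(wire)  # [b'patient record'] (plaintext on the wire)

try:
    send_fixed(None, b"patient record", wire)
except EncryptionError as err:
    print("refused to send:", err)  # refused to send: no session key
```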

In practice, we often deal with a mixture of design and implementation flaws that result in vulnerable systems. Avoiding this mixture requires that security requirements be built in at the very early stages of system design. With existing (or commodity) systems, we also need analytical tools that find vulnerabilities so they can be fixed, and protection mechanisms that guard against vulnerabilities that cannot be fixed in production.

Security principles that guide design

Security revolves around three basic principles: integrity, confidentiality, and availability. Following these principles guides the engineering and testing processes so that potential vulnerabilities are avoided, or fixed when they appear. To get a better idea, imagine we are designing an e-government platform for patient registration. The system design process must treat integrity as a general property. Integrity means that the system protects the functions and data provided to patients and does not allow unauthorized access to, or modification of, sensitive functions or data. Confidentiality guides the design to keep users (patients) isolated from each other, to keep all data secret unless the user consents to its disclosure, and to allow no unauthorized or unprotected data exchange. Finally, availability means that patients must be able to access the system at any time. Disruption of functions or of data availability undermines system security, so our design has to maintain availability at all times.
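The confidentiality and integrity requirements for the patient registration platform can be expressed as access-control checks. The sketch below assumes a simple, hypothetical policy: a user may read a record they own or one whose owner has consented to share it with them, and only the owner may modify it. `Record`, `can_read`, and `can_modify` are illustrative names. Availability is an operational property (keeping the service running) and is not captured by checks like these.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """A hypothetical patient registration record."""
    owner: str
    data: str
    shared_with: set = field(default_factory=set)  # users the owner consented to share with


def can_read(user, record):
    # Confidentiality: data stays secret unless the user owns it
    # or the owner has consented to share it with this user.
    return user == record.owner or user in record.shared_with


def can_modify(user, record):
    # Integrity: only the owner may change the registration data.
    return user == record.owner


record = Record(owner="alice", data="registration details", shared_with={"dr-bob"})
print(can_read("dr-bob", record), can_modify("dr-bob", record))  # True False
print(can_read("carol", record))                                 # False
```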

How to protect systems?

From a high-level perspective, we need to follow three fundamental approaches to protect systems.

Design systems based on strong threat models and use state-of-the-art architectural and theoretical techniques to provide integrity, confidentiality, and availability.

Provide protective environments that enable a multi-layer approach to detecting potential vulnerabilities.

Continuously test systems for flaws and use an efficient re-engineering process to fix vulnerabilities.

Threat modeling and requirements

In the first approach, we assume that the system is in development. In a proper software engineering process, security requirements are integrated with system requirements early on. These requirements must result from a threat modeling process, which yields an understanding of the adversary, the attack vectors, and the possible points of failure. Threat modeling must be coupled with the other design processes, which means that system developers should also take the performance and functionality requirements of the system into consideration. With a proper threat model, requirements can be set to secure the system against the foreseen attack vectors, and security measures are designed for each identified point of failure.

Consider the healthcare system described earlier. Suppose threat modeling identifies denial-of-service (DoS) and data-leak attacks as the major attack vectors. The adversary may use client-side entry points to find data-leak exploits (such as an SQL-injection exploit) or find weaknesses in one of the layers of the software stack to cause denial of service. System developers can incorporate the results of threat modeling into the requirements engineering process. We know that client-side entry points are potential points of failure, so we could use input filtering and sanitization as security measures. Further, we could employ an intrusion prevention system at the web server layer to filter messages that are likely to cause DoS.
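A common way to close the SQL-injection entry point is to pass client input to the database as bound parameters rather than pasting it into the query text. This is a standard technique that complements the filtering and sanitization mentioned above rather than replacing them. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `patients` table and its rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE patients (name TEXT, diagnosis TEXT)")
conn.executemany(
    "INSERT INTO patients VALUES (?, ?)",
    [("alice", "asthma"), ("bob", "diabetes")],
)


def lookup_vulnerable(name):
    # Client input is concatenated into the SQL text, so crafted input
    # can change the meaning of the query.
    query = "SELECT diagnosis FROM patients WHERE name = '" + name + "'"
    return [row[0] for row in conn.execute(query)]


def lookup_safe(name):
    # The ? placeholder binds `name` as a value; it is never parsed as SQL.
    rows = conn.execute("SELECT diagnosis FROM patients WHERE name = ?", (name,))
    return [row[0] for row in rows]


payload = "x' OR '1'='1"
print(lookup_vulnerable(payload))  # leaks every patient's diagnosis
print(lookup_safe(payload))        # [] (no patient has that literal name)
```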

Protective environments

In the second approach, we assume that the system is in production. Being skeptical of the design process, we provide protective systems in a layered approach. The first layer of protection is the system itself, with all its security measures. The second is the platform that executes the system, namely the operating system and the runtime environment. The third is the hardware. We could continue adding layers, such as protection on the communication network or indirection through other servers that relay requests. The layers are skeptical of each other: we add more layers to trap attacks that could bypass the previous ones.
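The layered approach can be sketched as a chain of independent checks, each of which may reject a request regardless of what the other layers decided. In the sketch below, the three layers (a per-client rate limiter standing in for DoS filtering, an input filter, and an application-level authorization rule) and the request format are hypothetical simplifications; real layers would run in different components, such as a network appliance, the web server, and the application. The demo freezes the rate limiter's clock so the output is deterministic.

```python
import time
from collections import defaultdict


class RateLimiter:
    """Outer (network) layer: at most `limit` requests per client per window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self.history = defaultdict(list)  # client -> times of recent allowed requests

    def __call__(self, request):
        now = self.clock()
        recent = [t for t in self.history[request["client"]] if now - t < self.window]
        allowed = len(recent) < self.limit
        if allowed:
            recent.append(now)
        self.history[request["client"]] = recent
        return allowed


def input_filter(request):
    """Middle (platform) layer: only letters, digits, and '-' in the query."""
    return all(ch.isalnum() or ch == "-" for ch in request["query"])


def authorize(request):
    """Inner (application) layer: users may only look up their own record."""
    return request["query"] == request["user"]


def handle(request, layers):
    # Every layer checks the request independently; passing one layer
    # does not exempt the request from the next.
    for name, check in layers:
        if not check(request):
            return "blocked by " + name
    return "served"


layers = [
    ("rate limiter", RateLimiter(limit=3, window_seconds=1.0, clock=lambda: 0.0)),
    ("input filter", input_filter),
    ("authorization", authorize),
]

client = "10.0.0.5"
print(handle({"client": client, "user": "alice", "query": "alice"}, layers))         # served
print(handle({"client": client, "user": "alice", "query": "x' OR '1'='1"}, layers))  # blocked by input filter
print(handle({"client": client, "user": "alice", "query": "bob"}, layers))           # blocked by authorization
print(handle({"client": client, "user": "alice", "query": "alice"}, layers))         # blocked by rate limiter
```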

Security layers

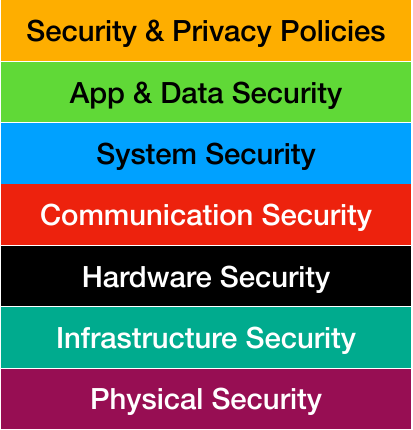

Security can be thought of in terms of a layered architecture. Anyone using a computer should be concerned about the security, privacy, and protection of digital assets (such as cryptocurrency holdings). To address this concern, we need to understand what affects security and privacy. As the figure above shows, the first concern comes from security and privacy laws, regulations, policies, and governance. To simplify the discussion, let us call these policies. A policy is made as a joint effort of domain experts, lawmakers, and bureaucrats. Policies can affect how data is stored, delivered, and manipulated. Policies can also determine what type of software can be used for data protection. Next, privacy and security can be influenced by how application software is designed. Application security problems start with flawed engineering and coding practices. The use of vulnerable libraries, incomplete testing, and inadequate security analysis can lead to all sorts of security problems in applications.

Computer scientists are more often interested in the next set of problems, at the system and network layers. System security problems can also result from flawed engineering and testing, but they have a more significant impact: a vulnerable system service, device driver, or kernel module can affect an extensive range of software applications and network layers. Networking software, in turn, requires careful attention to the correct implementation of protocols, cryptography, and reliable system software to protect data in transit. Hardware security involves processor design failures, firmware backdoors, and unreliable hardware components. Infrastructure such as large external equipment, switches, power grids, power generators, and cooling systems can profoundly impact security, as it can affect the system's availability and even its integrity. Finally, physical security is a problem involving humans in the loop. Inadequate physical authentication and access control can lead to physical intrusion, undermining the security and privacy of systems and data.